Welcome back to the Neural Net! We made it to Friday, so let’s celebrate and wrap the week with the latest in AI.

In today’s edition: MIT startup combats hallucinations, stock analysis gets an AI upgrade (sorry, no get-rich-quick tips), Reddit sues Anthropic over alleged data theft, archaeologists use AI to date ancient artifacts, and more.

▼

The Street

Note: Stock data as of market close.

▼

🧠 AI That Knows What It Doesn’t Know

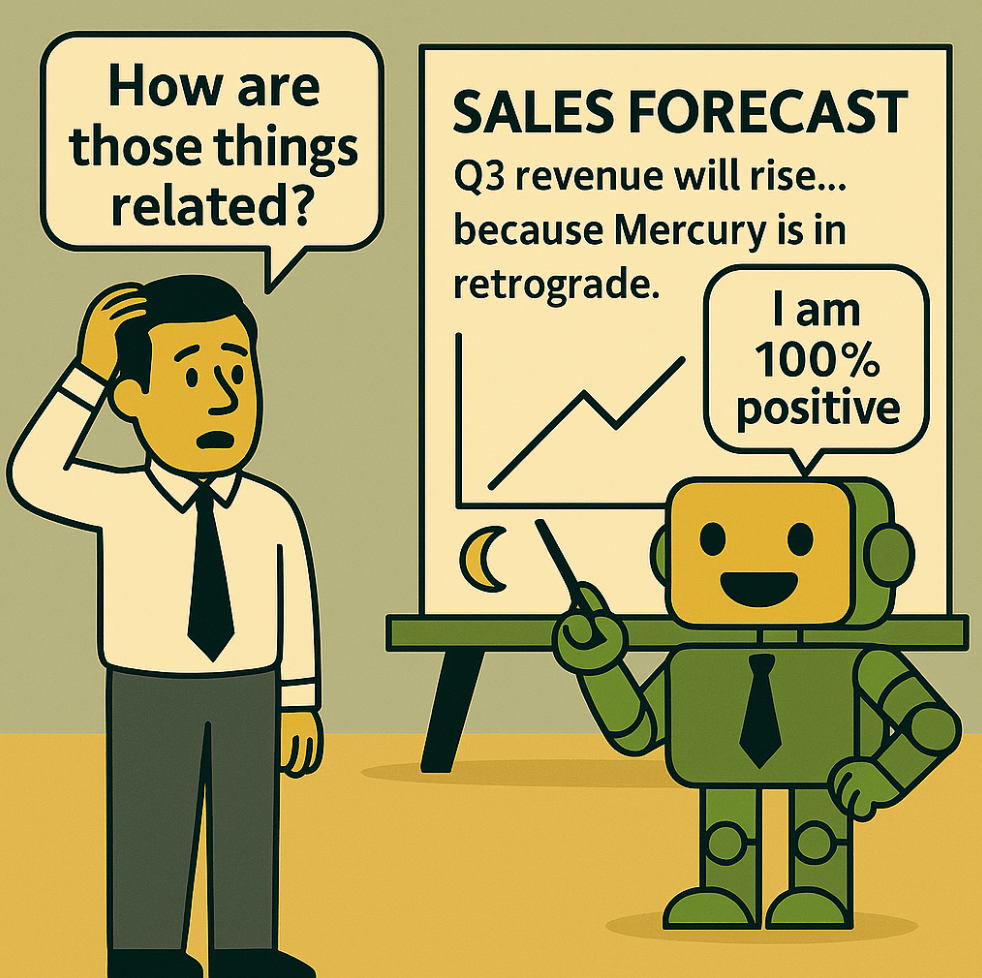

As generative AI shows up in more business tools, you’ll increasingly rely on its outputs to make real decisions. But if your AI tool doesn’t know when it’s wrong (aka hallucinating), you could get burned, and in fields like healthcare or autonomous driving, that kind of false confidence can be deadly.

What If Your AI Had a Panic Button?

Capsa, developed by MIT researchers, works like a built-in warning system for AI, flagging confusion before it turns into a bad decision.

It works behind the scenes to:

Highlight when an AI model is unsure or working with incomplete info

Help catch those weak points before they become mistakes

Retrain models with better data so the system gets smarter over time

Make smaller AI systems (like ones on your phone or embedded devices) more trustworthy
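We don’t know exactly how Capsa works under the hood, so here’s a rough sketch of one common, generic way to spot an unsure model instead: ask several independently trained models the same question and treat strong disagreement as a warning sign. This is not Capsa’s API; the function name, the example predictions, and the 0.1 threshold are all hypothetical.

```python
from statistics import mean, pstdev


def flag_uncertain(predictions: list[float], threshold: float) -> tuple[float, float, bool]:
    """Combine several models' predictions for one input and flag it if they disagree too much.

    Hypothetical helper illustrating ensemble disagreement, not any vendor's API.
    """
    avg = mean(predictions)
    spread = pstdev(predictions)  # population standard deviation as a simple disagreement score
    return avg, spread, spread > threshold


# Hypothetical outputs (e.g., confidence scores) from three independently trained models
confident = [0.91, 0.89, 0.90]
confused = [0.95, 0.40, 0.70]

for name, preds in [("confident", confident), ("confused", confused)]:
    avg, spread, flagged = flag_uncertain(preds, threshold=0.1)
    print(f"{name}: mean={avg:.2f}, spread={spread:.3f}, flagged={flagged}")
```

When the models agree, the spread stays small and nothing gets flagged; when they land all over the place, that input is a good candidate for a human double-check or for better training data.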

The Bigger Picture

As AI becomes more deeply woven into our work, knowing when to trust it matters as much as what it says. Capsa is part of a new wave of startups building “gut checks” into AI systems so they can flag blind spots and avoid overconfidence.

💡Bottom line: It’s encouraging to see companies finally tackling one of AI’s most fundamental flaws: hallucinations. This is how AI gets safer, smarter, and more useful: not by knowing everything, but by knowing what it doesn’t.

Tips for Fewer Hallucinations

Until tools like Capsa are built into your AI stack, here’s how to reduce hallucinations today:

Use tools that connect to real data

→ Example: In ChatGPT, turn on web search, or use an assistant built on retrieval-augmented generation (RAG). Both let the AI pull from real sources instead of relying on memory alone.

Be specific with your prompts

→ Instead of: “Write an overview of this product.”

→ Try: “Based on this website: [link], summarize the top 3 features.”

Upload supporting material

→ Bad: “What’s the current return policy at Target?”

→ Good: “Here’s Target’s return policy text. Summarize it in plain English.”
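If you work with a model through code instead of a chat window, the same idea applies: paste the source material into the prompt and tell the model to stick to it. Here’s a minimal sketch in Python; `build_grounded_prompt` is a hypothetical helper, the instruction wording is just one reasonable choice, and you’d still pass the result to whichever AI tool you use.

```python
def build_grounded_prompt(question: str, source_text: str) -> str:
    """Wrap a question with supporting material and ask the model to answer only from it.

    Hypothetical helper; the exact instruction wording is an assumption, not a standard.
    """
    return (
        "Answer using only the source text below. "
        "If the answer is not in the source, say \"I don't know.\"\n\n"
        f"Source:\n---\n{source_text.strip()}\n---\n\n"
        f"Question: {question.strip()}"
    )


if __name__ == "__main__":
    # Placeholder: paste the real policy text here rather than trusting the model's memory
    policy = "<paste the retailer's return policy text here>"
    print(build_grounded_prompt("Summarize this return policy in plain English.", policy))
```

The “say I don’t know” line matters: it gives the model an explicit way out instead of inventing an answer when the source comes up short.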

▼

💡How To AI: Stocks Go Up. Excel Stays Closed.

Google just dropped another extremely handy AI tool: in Google Labs, AI Mode now lets you ask finance questions and get interactive charts with clear explanations, all in one go. Want to compare airline performance since the pandemic, or see which companies beat earnings expectations? Just ask, and you’ll instantly get a clean, custom visualization without digging through earnings reports.

It’s powered by Gemini’s multi-step reasoning and multimodal AI, which means it can understand your question, pull real-time and historical data, and decide the best way to show it.

It’s not just for stocks—you can use it to explore mutual funds, company performance, and market trends, too. Instant analysis, zero data wrangling or spreadsheet pain. It also doubles as a creative partner, helping you see the data from angles you might’ve missed.

▼

🧠 Stay Smart, Stay Informed

You already read the Neural Net to keep up with the latest AI and actually understand it. Why stop there? For everything else—politics, science, sports—Morning Brew delivers the news like we do: no nonsense, with a touch of humor to keep things light.

News you’re not getting—until now.

Join 4M+ professionals who start their day with Morning Brew—the free newsletter that makes business news quick, clear, and actually enjoyable.

Each morning, it breaks down the biggest stories in business, tech, and finance with a touch of wit to keep things smart and interesting.

▼

Heard in the Server Room

Palantir CEO Alex Karp called AI a dangerous but decisive force, framing the U.S.–China tech race as winner-takes-all. He says America is ahead (thanks in part to Palantir, of course) and that its Western allies had better take notes. He also denied that Palantir spies on Americans, brushed off complaints about its stock price, and positioned the company as the brainy bodyguard of democracy. Its current, astronomical price-to-earnings ratio? 545. But back to his broader warning: AI isn’t just innovation; it’s power, and whoever wields it will shape the future global order.

Builder.ai raised $450M claiming it was a “no-code platform designed to simplify app development”—when in reality, 700 human engineers in India were quietly doing the work. Once valued at over $1 billion and backed by Microsoft and the Qatar Investment Authority, Builder.ai has officially unraveled. It exaggerated revenue by 340%, allegedly faked sales, laid off 1,000 employees, and is now filing for bankruptcy. In a market where anything labeled “AI” commands sky-high valuations, it’s a wild reminder that not every AI startup is powered by actual AI—and it raises the question: who else is hiding behind the hype?

Reddit has filed a lawsuit against AI startup Anthropic, accusing it of scraping Reddit posts to train its Claude chatbot even after saying it had stopped. According to Reddit, Anthropic’s bots accessed the platform more than 100,000 times without a license, all while the company presented itself as an ethical leader in AI. While companies like Google and OpenAI signed licensing agreements, Reddit says Anthropic took the DIY route and profited without paying a cent. Now Reddit is seeking damages and a court order blocking Anthropic from using its content. And with both companies headquartered just 10 minutes apart, morning commutes might get a little tense.

▼

AI Just Dated Ancient Scrolls Without Destroying Them. That’s Kind of a Miracle.

For decades, the main scientific way to figure out how old the Dead Sea Scrolls were was to literally tear off pieces and test them with radiocarbon dating. Not ideal when you’re working with fragile texts that are roughly 2,000 years old. (For reference, the scrolls include the oldest known manuscripts of the Hebrew Bible.)

Now, a new AI model named Enoch is changing the game—using computer vision to analyze handwriting and estimate the scrolls’ age, no damage required.

Trained on ancient scripts paired with radiocarbon dates, Enoch learned to spot subtle shifts in handwriting over time.

It analyzed 135 undated scrolls and estimated that some were decades older than historians thought.

One copy of Ecclesiastes may have been written during the author’s lifetime.

And it revealed that two writing styles—previously thought to come from different eras—actually overlapped.

Why it matters: This kind of tech could help date ancient Chinese manuscripts or even disputed historical letters, all without laying a finger on the original.

Enoch might be the first archaeologist that leaves no trace.

▼

That’s it for today. Have a great weekend, and we’ll catch you Tuesday with more neural nuggets!