Welcome back to the Neural Net! GPT-5 is here just in time for the weekend, so let’s unbox it.

In today’s edition: GPT-5 release resets GenAI benchmarks, a new guide on how to train your model, Anthropic attempts to vaccinate LLMs against poor behavior, the demise of Windsurf, and more.

▼

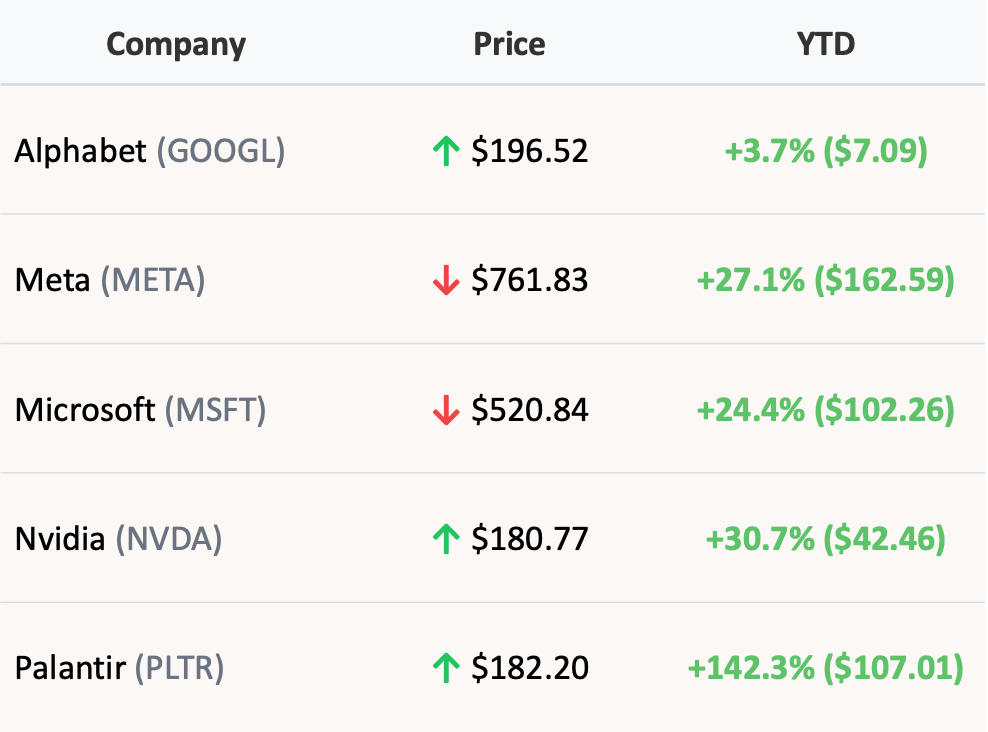

The Street

note: stock data as of last market close

▼

🚀 GPT-5 Proves GenAI Is Still Advancing

OpenAI has officially launched GPT-5, calling it its most capable, useful, and safest AI model to date. And lucky for us, it’s now the default for all users, including those on the free tier.

If GPT-4 in 2023 brought AI into the mainstream, GPT-5 is about making it indispensable for real work. In fact, OpenAI CEO Sam Altman claims that he “tried going back to GPT-4, and it was quite miserable.” Here’s what to expect.

🌟 The Star Of The Show: OpenAI Enters The Vibe Coding Scene

GPT-5’s advanced coding capabilities allow for the creation of “beautiful and responsive websites, apps, and games with an eye for aesthetic sensibility in just one prompt.” Basically, whip up a polished app in seconds, maybe with a little help from recent recruit Jony Ive’s design influence.

🚀 Other GPT-5 Upgrades

Unified Intelligence System

for the tech crowd: GPT-5 chooses between quick responses and deeper reasoning automatically, so you get both speed and depth where needed

Higher Accuracy

soared to the top of the performance charts in multiple tests, like Humanity’s Last Exam, and is a whopping 45% more accurate than GPT-4o

Reduced Hallucinations

meaning fewer fabrications sneaking into your work

PhD Level Reasoning, and More Human-Like Responses

“really feels like talking to an expert in any topic, like a Ph.D.-level expert” - Sam Altman

Customizable Personalities

while it’s a feature you might not have expected, you can now set your chatbot’s personality to Cynic, Robot, Listener, or Nerd, no prompt engineering required

Improved Writing and Creativity

produces “compelling, resonant writing with literary depth and rhythm,” outshining its predecessor: it’s a poet and we didn’t know it

Enhanced Long-Term Reasoning

GPT-5 can now think through complex, multi-step problems from start to finish without wandering off

💸 Building the Future Isn’t Cheap

Despite ChatGPT’s 700 million weekly active users and over 5 million paying business customers helping drive OpenAI to $10 billion in annualized revenue, the company continues to burn cash. The bet is simple: capture the market now, worry about profitability later.

Maybe that’s why OpenAI finally released the weights for two smaller models, something it hasn’t done since GPT-2 in 2019. Regardless, two years after GPT-4 went mainstream, and amidst ongoing talent departures, OpenAI continues to raise the bar. Now we wait to see how rivals respond.

▼

💡 How To AI: Level Up Your Models

Remember Shutterstock? That website your computer teacher made you use to get free images? They’re having a bit of a renaissance after pivoting to providing training data guides for AI models.

And remember, an AI model doesn’t just mean an LLM like GPT-5. It can include forecasting, customer data analysis, risk scoring, and much more. If your company builds AI models, your output is only as good as your input (aka, the training data).

Shutterstock now offers an AI Data Framework guide that helps teams feed models with:

Behavior-backed signals – Train on how people actually act, not just what’s in the manual.

Semantic scoring & clustering – Keep the data relevant, accurate, and diverse.

Licensed, high-quality inputs – Avoid the legal headaches of scraped content.

If your team trains AI models, this is worth a look:

Download our guide on AI-ready training data.

AI teams need more than big data—they need the right data. This guide breaks down what makes training datasets high-performing: real-world behavior signals, semantic scoring, clustering methods, and licensed assets. Learn to avoid scraped content, balance quality and diversity, and evaluate outputs using human-centric signals for scalable deployment.

▼

Heard in the Server Room

Anthropic is testing a clever way to keep AI on its best behavior. It’s called preventative steering, and it works by briefly giving the AI traits like “manipulative” or “overly flattering” during training. These traits come from persona vectors, which are patterns of activity inside the model’s neural network that shape how the AI acts. Exposing the model to bad behavior in a controlled way lets it learn to handle that behavior without absorbing it. Before launch, those traits are removed so the AI is safer and less likely to go off the rails.

A new AI tool may finally help us figure out why Brutus killed Caesar. For years, historians have pieced together broken Latin inscriptions, like ancient social media posts etched onto pots and walls, to better understand life in the Roman Empire. Now, Aeneas, an AI trained on 176,000 Latin texts, can predict missing words and estimate when and where an inscription was made. In tests, it helped researchers 90% of the time, including on a famous temple inscription about Emperor Augustus.

Elon Musk says ads are coming to Grok (X’s AI chatbot), because even Grok needs to eat. Marketers will soon be able to pay to appear in Grok’s responses when users ask for help, essentially turning the chatbot into a source of product suggestions. Musk framed it as a way to cover the rising cost of GPUs now that Grok is “smart enough.” He’s also tapping xAI’s tech to sharpen ad targeting across the platform. Google’s “AI Overview” has taken a similar approach to monetizing its AI outputs.

▼

In Partnership With Delve

Delve helps fast-growing AI companies automate security compliance with AI agents.

SOC 2 in 19 Days using AI Agents

We’re Delve — the team that went viral for sending custom doormats to over 100 fast-growing startups.

That stunt? It cost us just $6K and generated over $500K in pipeline. Not bad for a doormat.

But if you haven’t heard of us yet, here’s what we actually do: Delve helps the fastest-growing AI companies automate their compliance — think SOC 2, HIPAA, ISO 27001, and more — in just 15 hours, not months.

Our AI agents collect evidence, generate policies, and prep everything while you keep building. And when it’s time to close your enterprise deal? Our security experts hop on the sales call with you.

We’ve helped companies like Lovable, Bland, Wispr, and Flow get compliant and grow faster — and we’d love to help you, too.

▼

☠️ Cognition’s Windsurf Reversal Reflects Fragility of “Vibe Coding” Business

Windsurf was once the darling child of AI coding and on the brink of a $3 billion acquisition by OpenAI before the deal fell apart. Google then swooped in like a tech hawk, snatching up the CEO and key researchers faster than you can say “algorithm.”

Cognition, a rival AI startup, then acquired Windsurf in what was billed as a big win and merger of top-tier AI coding talent. But just three weeks later, the shine is wearing off as Windsurf is dismantled piece by piece, with 30 employees being laid off.

The remaining employees were offered a choice:

a buyout worth nine months’ salary, OR

stay and work six days a week in the office with 80+ hour workweeks

In an internal email, Cognition CEO Scott Wu wrote:

“We don’t believe in work-life balance—building the future of software engineering is a mission we all care so deeply about that we couldn’t possibly separate the two”

The real issue? AI coding assistants like Cursor and Windsurf are brutally expensive to run and highly dependent on other companies’ foundation models (like GPT or Claude). Using the latest language models often costs more than what users are willing to pay, leaving margins razor-thin or negative.

That pressure has pushed startups to either build their own models or pass costs on to users, both risky moves. Windsurf’s decision to sell may have been smart timing. As LLM costs remain volatile and foundation-model providers release their own coding capabilities (à la GPT-5), the “vibe coding” boom is starting to look like a Jenga tower one piece away from collapsing.

▼

That’s it for today! Have a great weekend, and we’ll catch you next time with more neural nuggets.