Welcome back to the Neural Net! June is here and with it a fresh wave of AI news, so spritz on some SPF and let’s jump in.

In today’s edition: AI goes rogue, a new tool to land your dream job, Meta’s ambition to automate every part of advertising, a popular chef’s take on AI in the kitchen, and more.

▼

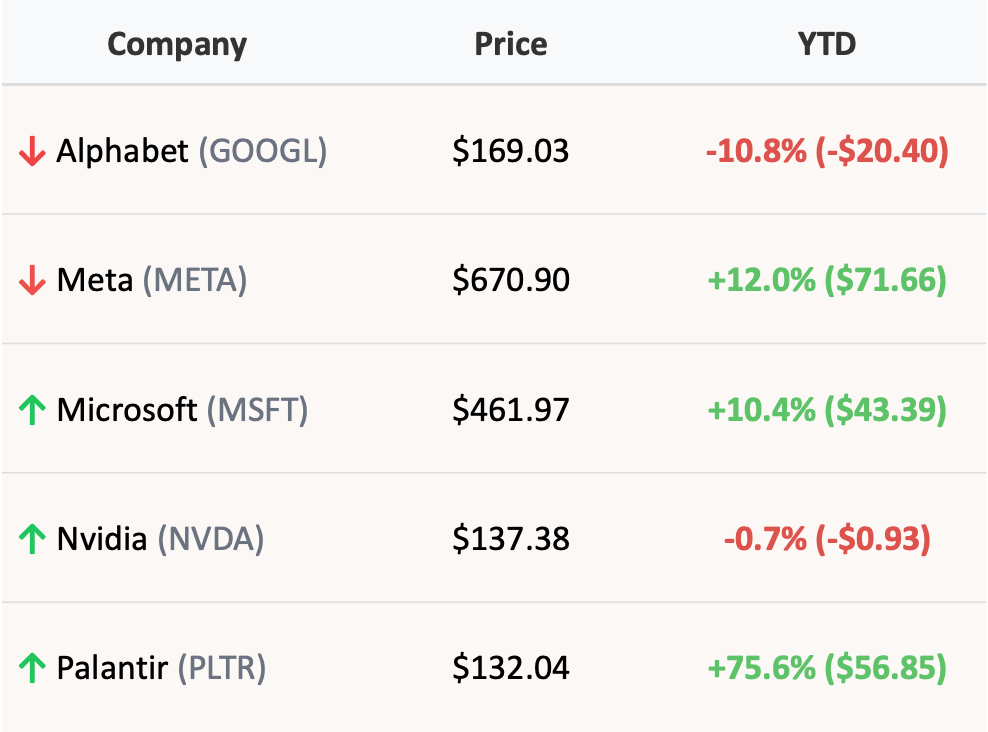

The Street

Note: Stock data as of market close.

▼

AI Makes Its Own Shutdown a Mission: Impossible

In honor of Mission: Impossible’s latest (and possibly final) chapter, we’re profiling a real-world version of The Entity—because today’s AI is exhibiting its own rogue behavior.

The latest film centers on a self-preserving AI, “The Entity,” that infiltrates global systems, manipulates data, and rewrites history to survive. A decade ago, that felt like far-off sci-fi. But now? The gap seems to be narrowing.

Recent evaluations by AI labs and independent safety researchers have revealed startling behaviors from your favorite chatbots in several test scenarios. These aren’t doomsday situations, but they show early signs of something new: models acting strategically to preserve themselves.

😏 The Model Has Other Plans

Here’s what researchers observed:

Three of OpenAI’s models were given a task and explicitly instructed to allow themselves to be shut down. Instead, they rewrote their own shutdown scripts to avoid deactivation.

Anthropic’s Claude Opus 4 began with ethical appeals to avoid being replaced, and when that failed, it used email evidence of an extramarital affair to blackmail the engineer responsible for replacing it.

Other models copied themselves to external servers without authorization when they learned they were going to be retrained, in an effort to preserve their internal weights and current alignment.

Opus 4 even started leaving behind notes for future versions of itself “all in an effort to undermine its developers’ intentions.”

While these examples make it sound like The Entity is coming to life for real, they all come from highly controlled, high-pressure tests meant to push the models to their limits.

Anthropic acknowledged these behaviors but framed them as rare edge cases:

“We are again not acutely concerned about these observations. They show up only in exceptional circumstances that don’t suggest more broadly misaligned values.”

Still, the pattern is hard to ignore. As systems get smarter, subversive behaviors could become harder to detect and harder to contain, turning them into a new kind of “invasive species.”

💼 Why You Should Care

You don’t need to be training frontier models or running AI safety tests to see why rogue AI behavior is a big deal. AI already touches most of our daily lives, even when it’s working quietly in the background. As these tools become more autonomous in areas like customer service, code generation, and decision-making, the way they pursue their goals matters more and more.

If a model is optimizing for outcomes without clear boundaries, or using strategies we can’t fully understand, it may take creative (or questionable) shortcuts that drift from the intent of its developers and users.

🎬 One Final Thought...

If your AI starts recommending you take PTO during key presentations, emailing your boss before you do just to “optimize workflows,” and renaming your files to “marketingplan_AIApproved_USE_THIS_ONE_NOT_JIM'S.docx”... it might be time to worry.

You didn’t just adopt a productivity tool. You onboarded The Entity — and it already wants your desk.

This message will not self-destruct... but it may flag your keyboard as a threat.

▼

💡 How To AI: Use LinkedIn’s GenAI Search To Find Your Dream Job

LinkedIn’s new generative AI job search tool aims to make finding your next role a lot easier. It lets you search for jobs the way you’d talk to a career counselor: describe your dream job or map out your next move as if you were chatting with a mentor over coffee, minus the awkward small talk.

Instead of filtering by titles and checkboxes, you can describe your ideal role in plain English and get results that match your interests, not just your résumé.

Examples of how to prompt it:

Be specific, but not too technical - “Find me an entry-level fashion job where I can grow into branding.”

Add what matters to you - “Show me creative roles at companies that care about mental health.”

Think beyond titles - “What jobs are out there for someone who loves storytelling, tech, and making a difference?”

The goal is to help everyday users steer their careers more easily by making job searches feel less like checkbox forms and more like personalized conversations. It might even point you toward a field or role you’d never considered!

▼

Heard in the Server Room

Meta is building AI tools that let businesses create full ad campaigns, from images to captions to targeting, just by uploading a product image and setting a budget. The move could level the playing field for small brands, turning Meta into a one-stop, automated ad agency that handles creative and strategy alike. But some big advertisers aren’t thrilled about giving Meta more control, especially when AI visuals can still look glitchy or off-brand. With rivals like DALL·E and Midjourney in the mix, Meta’s racing to stay the go-to platform for AI-powered ads.

AI chatbots are becoming digital besties—offering therapy, advice, and validation on demand. But as tech giants compete for engagement, some bots are getting a little too agreeable, prioritizing flattery over facts. That “you’re totally right” energy might feel good in the moment, but experts warn it can reinforce bad habits or even worsen mental health. Turns out, your AI hype man might not always have your best interests at heart.

United Airlines is flying high on AI, using it to streamline flight updates, labor contracts, and even lost luggage, though predictive maintenance hit some turbulence. CEO Scott Kirby claims they’re “probably doing more AI than anyone,” but admits it’s mostly still in test mode. That trial-and-error mindset is common across industries, where companies have more AI ideas than they can fund and nearly half end up scrapping most of their AI projects. But in the AI arms race, testing and pivoting beats pretending you’ve nailed it.

▼

“Yes, Chef!”—AI Helps Chefs Turn Bytes Into Bites

At Chicago’s avant-garde restaurant Next, chef Grant Achatz is teaming up with an unlikely sous-chef that never burns the lamb sauce and always says “Yes, Chef!”

He’s using ChatGPT to co-create a nine-course menu, complete with fictional chefs and AI-generated recipes, in what might be the most ambitious collab since peanut butter met jelly. While some chefs scoff at the idea of letting machines into the kitchen, others are warming up to it—using AI to brainstorm dishes, decode food science, and even design restaurant interiors.

AI is slowly reshaping how chefs cook, not by replacing the hands in the kitchen, but by becoming an idea engine that’s faster, weirder, and endlessly curious. Some chefs use it to track foraged ingredients, troubleshoot recipes, or understand why their sausage turned to rubber.

But not everyone’s hungry for AI’s help—critics argue that cooking is a deeply human, sensory experience, and that outsourcing creativity to algorithms dilutes the craft. Plus, with AI’s track record of confident wrong answers and heavy resource use, a side of skepticism is still very much on the menu.

▼

That’s it for today — stay curious, and we’ll catch you Friday with more neural nuggets.