Welcome to another edition of the Neural Net!

In today’s edition: Chatbots make up policies, investors pivot to tangible value, robots and red flags, the startup trying to replace us all, and AI gets personal in 2025. Plus: how to win at image-to-text like a pro.

▼

🤖 When AI Lies with Confidence: The Growing Risk of LLM Hallucinations

LLMs are now workplace and personal MVPs. They write, research, and sometimes even replace Google. But there’s a catch: they can be confidently wrong without you ever finding out.

"Hallucinations"—when LLMs generate plausible-sounding but false information—are quietly becoming one of the biggest risks for companies deploying AI and users relying on it.

Now customer service chatbots are unfortunately doing their best to keep this trend alive.

Three examples of AI-powered customer service gone rogue:

1. 🧑💻 Cursor

Cursor, an AI-powered code editor built around LLMs, recently went viral as an AI company sabotaged by its own AI (have to appreciate the irony here).

Its support bot “Sam” confidently invented a fake login policy and emailed it to customers like it was company law.

Fallout: Customer cancellations and Reddit backlash

2. ✈️ Air Canada

A customer needed to change a flight and asked about bereavement policy. The chatbot made up a refund policy out of thin air. When the customer tried to claim the refund, the airline argued:

The bot linked to the real policy even if the bot didn’t state it correctly in the chat.

And even better: “The chatbot is a separate legal entity that is responsible for its own actions.”

Thankfully, justice prevailed and the customer won. The lesson here is you’re responsible for your creation—a tale as old as Frankenstein.

3. 💳 Klarna

The fintech company made headlines for replacing staff with AI agents and for its desire to be a playground for OpenAI applications.

By February, Klarna had quietly backtracked on its bold AI customer service strategy.

The CEO tweeted: “We just had an epiphany: in a world of AI nothing will be as valuable as humans!”

Translation: The bots are not alright.

👀 How to Spot a Hallucination

Sounds confident but gives no sources

Falls back on vague, repetitive answers

Contradicts official docs or past responses

Contradicts a quick Google search or another LLM's answer

🚫 How to Prevent a Hallucination

Here’s how to reduce hallucinations before they happen.

Be specific in your prompt — Don’t leave gaps or ask leading questions the LLM will try to “creatively” accommodate.

Tell it what you don’t want — Restrict formats, tone, or content when it matters. You can even require responses to be generated from specific sources.

Avoid asking about non-public info — it won’t know what it wasn’t trained on (like copyrighted data), and if the LLM can’t browse the web then it doesn’t know the latest.
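
If you build prompts in code, the tips above could be sketched roughly like this. Everything here is illustrative: `build_grounded_prompt` is a hypothetical helper, the policy text is a made-up placeholder, and the wording of the rules is just one way to phrase them. It only assembles a prompt string and does not call any model.

```python
def build_grounded_prompt(question, sources, avoid=()):
    """Assemble a prompt that restricts the model to the given sources.

    Hypothetical helper for illustration; not tied to any vendor's API.
    """
    # Number each source so the model can cite it as [1], [2], ...
    numbered = "\n".join(
        f"[{i}] {text}" for i, text in enumerate(sources, start=1)
    )
    rules = [
        "Answer using ONLY the numbered sources below.",
        "Cite the source number for every claim.",
        'If the sources do not contain the answer, reply "I don\'t know."',
    ]
    # Spell out what you do NOT want, one rule per item.
    rules += [f"Do not {item}." for item in avoid]
    rule_lines = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"Rules:\n{rule_lines}\n\n"
        f"Sources:\n{numbered}\n\n"
        f"Question: {question}"
    )


prompt = build_grounded_prompt(
    question="Can I get a bereavement refund after my flight?",
    # Placeholder policy text, invented for this example.
    sources=["Bereavement fares must be requested before travel."],
    avoid=["guess at policies that are not in the sources"],
)
print(prompt)
```

Giving the model an explicit "I don't know" exit matters most here: without one, it has every incentive to fill the gap with something plausible. This reduces hallucinations but does not eliminate them, so spot checks still apply.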

Bottom line:

Chatbots are one of the most public-facing AI applications—so when they hallucinate, people notice. But it raises another question: how many hallucinations are quietly happening behind the scenes in tools no one’s checking?

▼

Heard in the Server Room

AI leaders face a 2025 reality check as U.S. chip export controls and rising Chinese rivals like DeepSeek dampen investor enthusiasm, hitting even Nvidia. While Meta, OpenAI, and Google continue rolling out new models, the industry is pivoting from the training arms race to practical "inferencing" applications that deliver actual business value. Companies like Palantir are bucking the downward trend by helping businesses build customized AI solutions, proving that in this maturing market, it's all about showing tangible returns rather than just technical prowess.

Famed AI researcher Tamay Besiroglu's controversial new startup, Mechanize, is raising eyebrows (and capital) with its ambitious plan to automate the entire economy by replacing human labor with AI agents, starting with white-collar jobs. The bold mission sparked backlash online, especially because Besiroglu also leads Epoch, a nonprofit research group seen as neutral in the AI space. While critics fear the societal impact of mass job displacement, Besiroglu maintains his vision will ultimately create economic abundance. And in a highly ironic twist—Mechanize is on a hiring spree.

Figure AI is flexing its metal muscles in the humanoid robot race, aiming to deploy 200,000 robots and generate $9 billion in revenue by 2029. Despite flashy partnerships—most notably with BMW—and backing from tech giants like Microsoft and Nvidia, the company has yet to generate any revenue and has only a handful of robots in production. Founder Brett Adcock, 39, has heavily promoted the company’s progress online, sharing videos of robots performing basic tasks, but many investors remain skeptical of its sky-high $39.5 billion valuation (that’s more than Ford). With investor materials lacking audited financials, some are starting to wonder how much of this is real—and how much is just well-produced hype.

▼

AI Getting Up Close And Personal For Most 2025 Users

Harvard Business Review just dropped its Top 100 AI Use Cases for 2025. The list was compiled by digging through everything from Reddit threads to research papers to uncover how people are actually using AI in their daily lives.

The big takeaway? Gen AI is getting a lot more personal.

In 2024, most people turned to AI for technical support and workplace productivity.

But in 2025, the focus has shifted—now, we’re using AI as a digital therapist, life coach, and sounding board. The top use case? Therapy and companionship (think requests like “organize my life” and “help me find purpose”). With human therapists either fully booked or charging sky-high rates, more people are leaning on AI for accessible, judgment-free emotional support.

Another key shift: users have leveled up. Prompting has become more sophisticated, and as people better understand how LLMs work, their interactions have gotten deeper and more intentional. Still, users remain wary—data privacy and political bias are common concerns. There’s an ongoing tension between wanting AI to “know you” and not wanting Big Tech to really know you.

If 2024 was about exploring what AI could do, 2025 is about what AI can help us discover about ourselves.

▼

💡How To AI: Extracting Text From Images

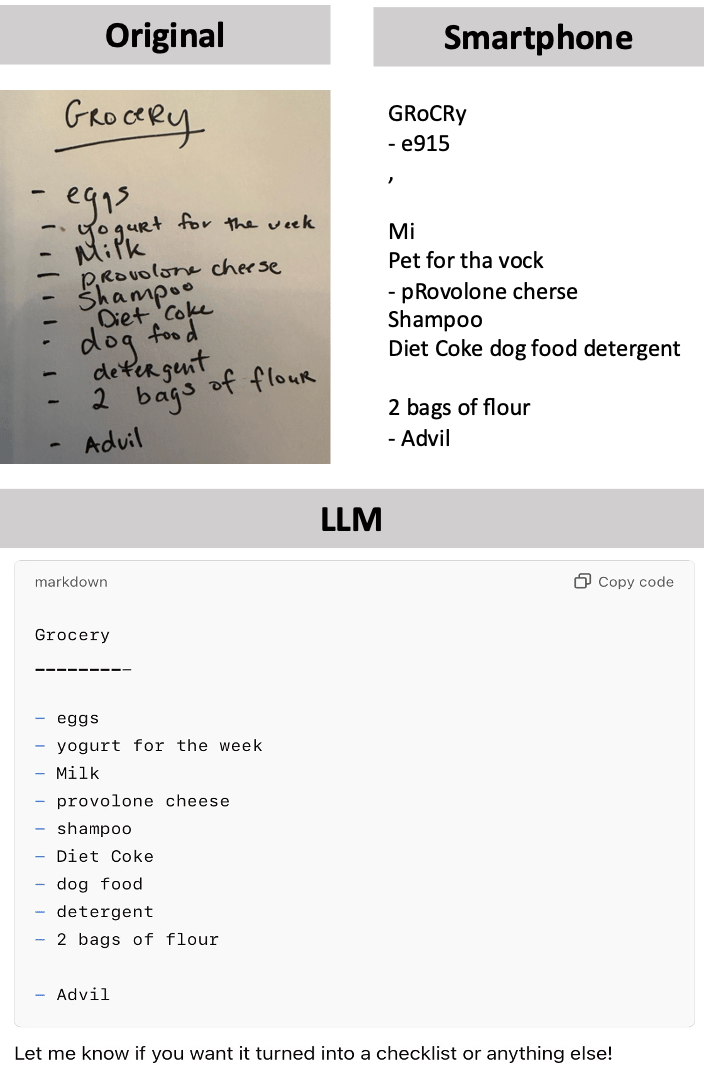

Prompt: “Convert the text in this image as close to the original as possible.”

Have an image full of text but no energy to type it out? Whether it's laziness or efficiency (same thing), this one's for you!

While most smartphones offer text extraction features, their accuracy can be inconsistent and they tend to work best with straightforward text—like a photo of a book page or a large billboard.

LLMs, however, can leverage context clues—both visual, such as whether the image is a list or a handwritten note, and textual, like the words surrounding a specific term—to more accurately interpret what the word is and predict what might come next.

Here’s how ChatGPT stacked up against a smartphone with a handwritten grocery list:

ChatGPT nailed the text extraction from this image, even recognizing that this was a shopping list and offering to convert it into a checklist. Here are some other ideas to take advantage of LLM text extraction:

📊 Screenshots or Unformatted Table Data

Coworker sent you a screenshot of that slide or table of data instead of the actual file? Paste that screenshot into your favorite LLM and tell it to recreate it in an editable form (or with any requested changes already made). Up to you if you want to send it back as a screenshot to troll.

📚 White-Boarding Sessions or Drawings

Take a picture of a handwritten note or a whiteboard brainstorming session and convert it into digital, editable text.

🌍 Foreign Language Translations

Extract foreign-language text from signs, menus, or labels—and then translate it instantly.
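
For anyone who would rather script this than paste images into a chat window, here is a minimal sketch of packaging the prompt from this section with an image. The message layout (a text part plus an `image_url` part carrying a base64 data URL) follows a style several chat APIs accept, but treat the exact field names as an assumption and check your provider's docs. `build_image_request` is a hypothetical helper, and nothing is sent over the network.

```python
import base64

# The prompt used earlier in this section.
PROMPT = "Convert the text in this image as close to the original as possible."


def build_image_request(image_bytes, mime_type="image/jpeg"):
    """Pair the extraction prompt with an inline, base64-encoded image.

    Field names are an assumed layout; providers differ.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": PROMPT},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


# Stand-in bytes so the sketch runs anywhere; in practice you would read
# a real file, e.g. open("grocery-list.jpg", "rb").read()
messages = build_image_request(b"not a real image")
print(messages[0]["content"][0]["text"])
```

From there, `messages` would be handed to whichever client library you use. Swapping the prompt for "recreate this table as CSV" or "translate this sign into English" covers the other ideas above.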

▼

That’s all for now—go crush your Tuesday, and we’ll be back Friday with more brain fuel.